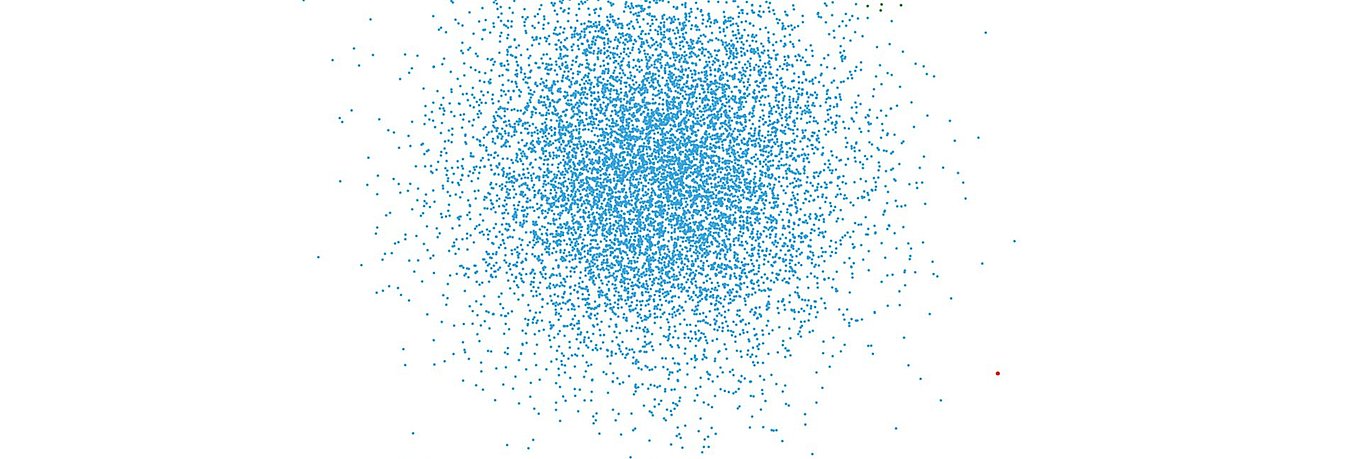

Many methods – such as one-class support vector machines or nearest-neighbor distance – are modeled on human intuition. Breyel puts it this way: “Measure the distances between the data points and mark those that are far from all the others.” If the distances between data points cannot be measured meaningfully, the data scientist and his colleagues at connyun resort to methods that are not distance-based, such as isolation forests. “We typically apply several methods to a data set and then keep using the most successful one, because no two data sets are the same, and often even the smallest details make a significant difference.”
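Breyel's rule of thumb translates almost directly into a nearest-neighbor distance score. The following is a minimal sketch, not connyun's implementation. It scores each point by its mean distance to its `k` nearest neighbors and flags points whose score exceeds a multiple of the median score. The function names, the choice of `k=2`, the threshold of three times the median, and the sensor readings are all hypothetical, chosen only for illustration.

```python
import math
import statistics


def knn_outlier_scores(points, k=2):
    """Score each point by its mean Euclidean distance to its k nearest neighbors.

    Points that lie far from all the others receive a high score.
    """
    scores = []
    for i, p in enumerate(points):
        # Distances from p to every other point, nearest first
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(sum(dists[:k]) / k)
    return scores


def flag_outliers(points, k=2, threshold=3.0):
    """Flag points whose score exceeds `threshold` times the median score.

    The median-based threshold is a hypothetical rule chosen for this sketch.
    """
    scores = knn_outlier_scores(points, k)
    cutoff = threshold * statistics.median(scores)
    return [s > cutoff for s in scores]


# Hypothetical machine readings: (temperature in °C, vibration in mm/s)
readings = [
    (20.1, 0.50),
    (20.3, 0.52),
    (19.9, 0.48),
    (20.0, 0.51),
    (20.2, 0.49),
    (35.0, 1.20),  # far from all other readings
]

print(flag_outliers(readings))  # [False, False, False, False, False, True]
```

The features in this example are not scaled, so temperature dominates the distance. In practice, each feature is usually scaled to a comparable range before distances are computed. Where no meaningful distance exists at all, a method that does not rely on distances, such as an isolation forest, is the alternative the text describes.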

A borehole is drilled incorrectly. A component is placed incorrectly in the installation space. The material supply is interrupted. These are all typical scenarios that hurt production and, in the worst case, can paralyse it. So-called outlier detection – an efficient method for detecting and correcting errors on the basis of machine data – can help here. Better still, it can help avoid mistakes in the first place.